Blended Rank Product Video

Learn how Rosetta, Golf Channel, Marriott, and Adobe use Blended Rank.

Marketers analyze their search metrics and ask themselves: What positions do I rank in? Which keywords are driving traffic? Which pages are converting? How are my lead volume, revenue, and conversions trending? These metrics set priorities for critical business decisions that affect the bottom line. Whether an organization manages a website with dozens of pages or hundreds of thousands of pages across multiple business units and domains, accurate analytics data is critical to success.

So just how big is the search dataset? In a word, massive. Search engines process a tremendous amount of data, including search queries, location-specific searches, social signals, and crawls for new content. Behind Google’s search algorithm is a Big Data company that processes over 20 petabytes of data each day. Beyond the search engines, social networks also process a massive dataset, which matters because of the positive correlation between social signals and search rankings. Facebook processes 2.5 billion pieces of content and over 500 terabytes of data each day, including 300 million photos and 2.7 billion Like actions. Twitter, meanwhile, processes over 400 million tweets each day.

In addition to processing massive amounts of data, search engines like Google and Bing constantly refine their algorithms and adjust the search engine results page (SERP). In August 2012, Google changed the SERP for many queries that return sitelinks, showing only seven organic results on page one instead of 10. This significant change affected numerous industries. Many organizations saw fewer page-one rankings and less traffic for keywords that had ranked in positions 8-10, which now appear on page two instead of page one.

As Google makes these changes, organizations need technology with the Big Data processing power to analyze this massive data stream. Many tools in the industry query Google in groups of 100 (num=100), modifying the request so that 100 results are displayed on a single page. Because that page is not the 10-result (or seven-result) page a user actually sees, this method produces inaccurate ranking data and contaminates business metrics.

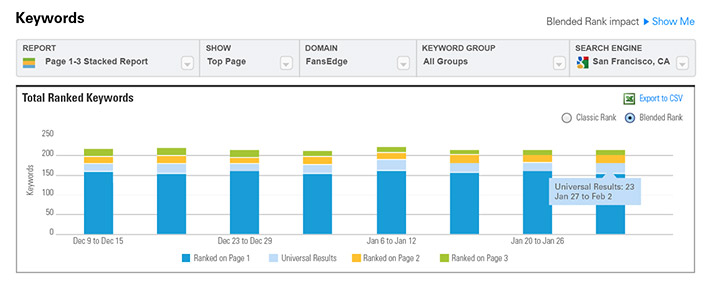

The BrightEdge commitment to data integrity includes crawling individual results pages (rather than using num=100 logic) to make sure that the data in the platform is accurate. In addition, with proprietary Blended Rank technology, developed in June 2012, BrightEdge ensures that as Google evolves the SERP, the ranking data shown in dashboards reflects what a user actually sees. Other technologies claim to measure Universal results; however, their numerical counts and on-page positions do not match what a user sees. BrightEdge is the only platform with Blended Rank technology, which shows actual placement among text, image, video, social, and local results.
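To make the distinction between a text-only rank and a blended position concrete, here is a minimal sketch. It is not BrightEdge's proprietary Blended Rank algorithm; it simply assumes a results page can be represented as an ordered list of blocks, top to bottom. The names `SerpBlock`, `organic_rank`, `blended_position`, and `page_for_rank`, the block types, and the example URLs are all hypothetical. The last helper shows how the same organic rank lands on a different results page when a page holds seven results instead of 10.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical model of one block on a results page, listed in the order
# a user sees it. The "kind" values are illustrative, not a real API.
@dataclass
class SerpBlock:
    kind: str  # e.g. "text", "image", "video", "local", "social"
    url: str


def organic_rank(serp: List[SerpBlock], url: str) -> Optional[int]:
    """1-based position of url counting only text (organic) results."""
    rank = 0
    for block in serp:
        if block.kind == "text":
            rank += 1
            if block.url == url:
                return rank
    return None  # url is absent or is not a text result


def blended_position(serp: List[SerpBlock], url: str) -> Optional[int]:
    """1-based position of url counting every block on the page."""
    for position, block in enumerate(serp, start=1):
        if block.url == url:
            return position
    return None


def page_for_rank(rank: int, results_per_page: int) -> int:
    """Results page (1-based) on which a given organic rank appears."""
    return (rank - 1) // results_per_page + 1


# Illustrative page with mixed result types (made-up URLs).
serp = [
    SerpBlock("text", "https://a.example"),
    SerpBlock("video", "https://b.example"),
    SerpBlock("text", "https://c.example"),
    SerpBlock("image", "https://d.example"),
    SerpBlock("local", "https://e.example"),
    SerpBlock("text", "https://f.example"),
]
print(organic_rank(serp, "https://f.example"))      # 3
print(blended_position(serp, "https://f.example"))  # 6
print(page_for_rank(8, 10), page_for_rank(8, 7))    # 1 2
```

In this toy page, the last text result is third among organic links but sixth in what the user scrolls past, which is the kind of gap a text-only count hides.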

At its core, BrightEdge is a Big Data company that dedicates significant engineering resources to creating a rigorous, high-quality set of data streams, driven by a commitment to customer success.

BrightEdge Data Integrity at a glance:

Most accurate data: Unique Blended Rank technology ensures that rank is a true representation of a user’s experience, and crawls run multiple times a week to keep data accurate.

BrightEdge puts Big Data in the hands of business users by processing complex data in innovative ways.

Below are some of the data-intensive innovations unique to the BrightEdge platform:

Social Signals: Twitter Trending and Facebook Preferred Marketing Developer (exclusive)

Data integrity is extremely important to our business. We’ve validated the data provided in BrightEdge and found it to be consistently accurate.